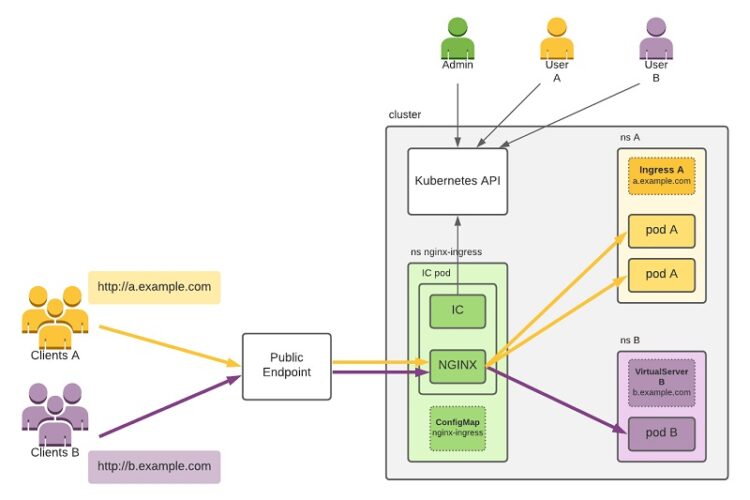

In Kubernetes, an ingress is a rule-based object that governs how users outside the cluster connect to the cluster’s services. There are three primary ways to expose a Kubernetes application to users outside the cluster. The NodePort service type makes the application available on the same port on every node. The LoadBalancer service type provisions an external load balancer that directs traffic to Kubernetes services in the cluster. Finally, an Ingress resource together with an ingress controller can be used to expose the application to end users.

Using a NodePort Service

Because the same NodePort is open on every node in the cluster, the service can be reached through the IP address of any node. Kubernetes routes traffic arriving on the NodePort to the correct service, which makes this the simplest way to grant external access.
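As a rough illustration, the sketch below builds a NodePort Service manifest as a plain Python dictionary and prints it as JSON. The service name `web`, the label `app: web`, and the ports 80 and 8080 are placeholder assumptions, not values from any particular cluster.

```python
import json


def nodeport_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a Service manifest of type NodePort as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            # Pods carrying this label receive the traffic (hypothetical label).
            "selector": {"app": app_label},
            "ports": [
                {
                    "protocol": "TCP",
                    "port": port,               # port of the Service inside the cluster
                    "targetPort": target_port,  # port the container listens on
                    # "nodePort" is left out, so Kubernetes assigns one itself.
                }
            ],
        },
    }


manifest = nodeport_service("web", "web", 80, 8080)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and passed to `kubectl apply -f`, which accepts JSON as well as YAML.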

Basic NodePort functionality is supported by every Kubernetes cluster; however, users running on Google Cloud or other public cloud providers may need to modify firewall rules before the port is reachable.

How It Works in Practice

If no port is specified, Kubernetes picks one at random from a preconfigured range (30000 to 32767 by default), which is rarely ideal. The range is deliberately non-standard and avoids commonly used ports, and the great majority of TCP and UDP clients have no trouble connecting to a port inside it.

Because each service receives a different random port, the numbers are not known in advance. As a result, setting up firewall rules, NAT, and related services becomes more complicated.
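One way to sidestep the unknown port number is to request a specific `nodePort` in the Service's port entry. The sketch below is a minimal helper for doing so; the function name `pin_node_port` and the port 30080 are made up for illustration, and the check assumes the default range of 30000 to 32767 (clusters can change it with the kube-apiserver flag `--service-node-port-range`).

```python
# Default NodePort range, 30000-32767 inclusive (assumes an unmodified cluster).
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def pin_node_port(port_entry: dict, node_port: int) -> dict:
    """Return a copy of a Service port entry with an explicit nodePort."""
    if node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(
            f"nodePort {node_port} is outside "
            f"{DEFAULT_NODE_PORT_RANGE.start}-{DEFAULT_NODE_PORT_RANGE.stop - 1}"
        )
    pinned = dict(port_entry)  # copy so the caller's entry stays untouched
    pinned["nodePort"] = node_port
    return pinned


entry = {"protocol": "TCP", "port": 80, "targetPort": 8080}
pinned = pin_node_port(entry, 30080)
print(pinned)
```

With the port fixed in the manifest, firewall and NAT rules can be written before the service is deployed. The trade-off is that the chosen port must not already be taken by another service in the cluster.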

NodePort is a useful abstraction for situations where a production-grade URL is not necessary, such as while an application is still in development. It is intended to serve as a building block for higher-level ingress models such as load balancers.

Load Balancer

The LoadBalancer service type is another option to consider. When a service is given this type, an external load balancer is deployed automatically. The load balancer is assigned its own IP address and routes traffic from the outside world to the Kubernetes service running in your cluster.
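A minimal sketch of this service type follows, again as a Python dictionary. The helper `external_address` reads the address that the cloud provider reports under `status.loadBalancer.ingress` once provisioning finishes. The names, ports, and the sample address `203.0.113.10` (from a range reserved for documentation) are assumptions for illustration only.

```python
from typing import Optional


def load_balancer_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a Service manifest of type LoadBalancer as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},  # hypothetical pod label
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


def external_address(service: dict) -> Optional[str]:
    """Return the load balancer's IP or hostname, or None if not yet assigned."""
    entries = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not entries:
        return None
    first = entries[0]
    # Some providers report an IP address, others a DNS hostname.
    return first.get("ip") or first.get("hostname")


service = load_balancer_service("web", "web", 80, 8080)
print(external_address(service))  # nothing assigned before the object is created

# Shape of the object after provisioning (sample data, not real output).
provisioned = dict(service, status={"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}})
print(external_address(provisioned))
```

Until the provider has finished provisioning, the status field is empty, which is why the helper returns `None` rather than raising an error.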

However, this is only viable if you are already running in a cloud-hosted environment. Not every cloud provider supports the LoadBalancer service type, and its implementation varies from one provider to the next. Bare-metal Kubernetes installations must supply a load balancer solution of their own. Probably the biggest drawback is cost: every service of this type launches a hosted load balancer and a new public IP address.

Even so, in environments where it is available, a load balancer is frequently the simplest and safest way to route traffic.

Conclusion

Kubernetes offers a high-level abstraction called Ingress. With it, HTTP traffic can be routed based on the hostname or URL path. The Ingress resource is the recommended approach for exposing HTTP services to end users.
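To make host and path routing concrete, the sketch below assembles an Ingress manifest for the `networking.k8s.io/v1` API. The hostname `example.com`, the paths, and the backend services `api` and `web` are hypothetical, and the resource only takes effect if an ingress controller is running in the cluster.

```python
import json
from typing import List, Tuple


def ingress_manifest(name: str, host: str, routes: List[Tuple[str, str, int]]) -> dict:
    """Build an Ingress that sends each URL path on `host` to a backend Service.

    `routes` is a list of (path, service_name, service_port) tuples.
    """
    paths = [
        {
            "path": path,
            "pathType": "Prefix",  # match the path and everything beneath it
            "backend": {"service": {"name": svc, "port": {"number": port}}},
        }
        for path, svc, port in routes
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": [{"host": host, "http": {"paths": paths}}]},
    }


ingress = ingress_manifest(
    "web-ingress",
    "example.com",
    [("/api", "api", 80), ("/", "web", 80)],
)
print(json.dumps(ingress, indent=2))
```

Here requests for `example.com/api` go to the `api` service and everything else on that host goes to `web`, so a single entry point can serve several services.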